Demystifying AI in Software Development: Rocketmakers’ Approach and What It Means for Our Clients

Software development is a notorious blend of innovative tools with the power to propel your business forward and an ever-rotating conveyor belt of trends and fads that may have little or no application to you or your business domain. As AI has its moment in the sun (again), you may be asking yourself: how, if at all, should I be using it in my business?

At Rocketmakers, we are choosing to embrace the power of AI to drive innovation, efficiency, and quality in our output. As a software agency building apps, websites, and SaaS solutions for a variety of clients, we constantly explore new ways of validating, and potentially leveraging, the latest tech in our day-to-day work. In this post, I want to demystify the use of AI in software development by sharing our current thoughts and approach, whilst also shedding light on what it means for our clients.

The AI "Seat"

We recently took the step of creating a dedicated AI “seat” within our business, one of many such tech seats within Rocketmakers. These usually take the shape of open, fortnightly meetings run by one of our more experienced developers and serve as a jumping-off point to:

- discuss important updates in the industry

- share any new resources (blogs, YouTube videos, product releases etc.)

- plan future work ensuring it fits with the company goals

- conduct small-scale tests into potential new process improvements

- raise any concerns around shifts in the industry/ecosystem

The seats serve as a collaborative hub where we can engage in healthy debates about our current approach and explore new directions for implementing available tools across the business. This culture of continuous learning and improvement helps us stay at the forefront of emerging tech, ensuring in the long run that our clients also benefit from any advances we make as a result.

What Tools are Available to Developers and How are They Being Used?

There are many areas where AI-powered tools, plugins or platforms are supporting developers in their coding. I’ve reduced these to three groups:

Code Generation, Completion or Suggestion

Personally I feel this is the area where most gains are currently being made.

As developers, much of the code we write follows regular patterns, which historically we have had to copy, paste and update whenever it needed repeating. Recently there have been major advances in tools such as GitHub Copilot and Tabnine, which plug into our usual code editors. These tools essentially work by scanning your existing code and making informed suggestions for what may come next (think of the auto-complete on your smartphone), which can vastly reduce the time taken to write a new feature or service.

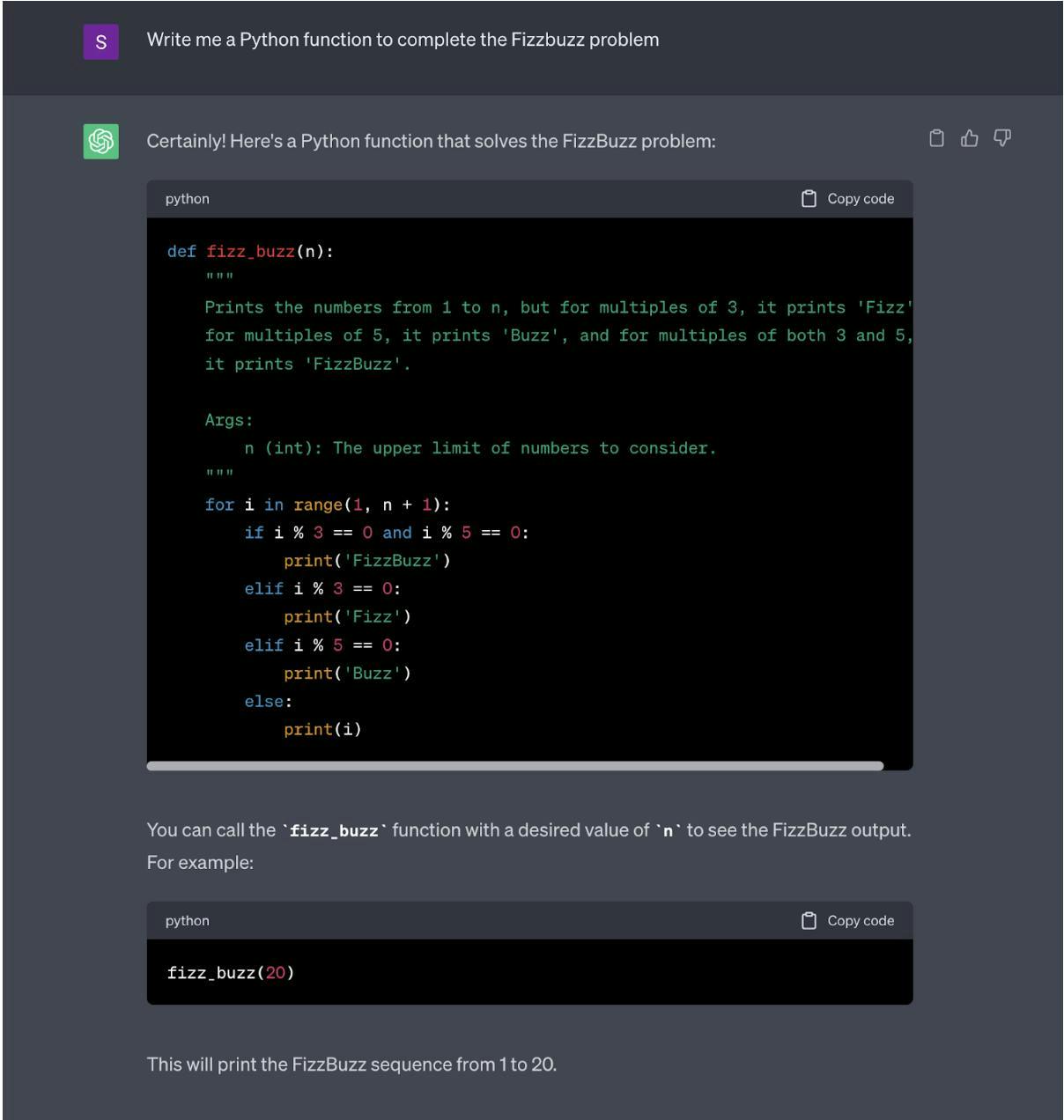

One application of rapidly improving large language models (LLMs) such as ChatGPT is to generate simple chunks of code. This could mean writing utility functions (“write a function that takes in a date and returns it in a different format”), adding basic documentation (“write some docs to describe the purpose and usage of this function”) or producing simple test cases (“write unit tests to cover this function”).
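To make those three prompts concrete, here is a minimal sketch in Python of the kind of output you might hope to get back: a small date utility with a docstring and a basic test. The name `reformat_date` and its default formats are illustrative assumptions on my part, not the output of any particular tool.

```python
from datetime import datetime


def reformat_date(
    value: str,
    source_format: str = "%Y-%m-%d",
    target_format: str = "%d/%m/%Y",
) -> str:
    """Return `value` converted from `source_format` to `target_format`.

    Both formats use the standard strftime/strptime directives.
    Raises ValueError if `value` does not match `source_format`.
    """
    parsed = datetime.strptime(value, source_format)
    return parsed.strftime(target_format)


def test_reformat_date() -> None:
    # Happy path using the default formats.
    assert reformat_date("2023-07-14") == "14/07/2023"
    # Custom target format.
    assert reformat_date("2023-07-14", target_format="%Y%m%d") == "20230714"
    # Unparseable input should raise rather than return garbage.
    try:
        reformat_date("14th of July")
    except ValueError:
        pass
    else:
        raise AssertionError("expected ValueError for unparseable input")


test_reformat_date()
```

Even for something this small, the generated code still needs reading and reviewing before it goes anywhere near a real codebase.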

Code Comprehension and Translation

Unfortunately, as developers we regularly have to interact with complex and hard-to-interpret codebases, systems and architectures. This could be because we’re working with a legacy system, or perhaps the original creators were under significant time or financial pressure. As an agency we regularly encounter this issue when inheriting code from new clients. What this means for the client is time and money spent on developers interpreting existing code.

Another use of GPT tools is to help us understand a particular code snippet or system that we’re struggling with. This could be as simple as feeding in a snippet along with the prompt “Can you explain what the following function does?”.

Another frequently encountered problem as an agency developer is writing code in a language you are less experienced with. For example, I might primarily be a JavaScript/TypeScript developer but may be asked to write some Python on my current project. If I am not particularly confident in Python, this task could take considerably longer. I may need to find another developer with more Python knowledge and ask them about their approach and any potholes I may hit. I might need to research the syntax for things I would already know how to write in JavaScript.

ChatGPT offers a number of shortcuts here.

I could begin by asking ChatGPT to explain the existing Python code I need to interact with, improving my understanding of the problem. Next, I could potentially write the function in TypeScript before asking ChatGPT to convert it to Python, or even ask ChatGPT to generate the whole function in Python to begin with. In either case, the time taken to produce something functional before I ask a developer with more domain knowledge to review it (more on this later) has been significantly reduced.

Code Quality

Quality Assurance (QA) is an area where many developers are already using AI-powered tools. Static analysis tools such as DeepCode (which applies machine learning to code analysis) and CodeClimate read codebases, detect potential issues, and suggest improvements. They can identify code smells, security vulnerabilities, performance bottlenecks, or other areas to refactor.

In the previous section’s example, we could also use GPT tools to suggest refactoring opportunities or code improvements. If I haven’t written Python for a number of years, I might ask it to review a function in case the language has moved on and there are now more concise, clean or efficient ways to write it.
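As a rough illustration of the kind of suggestion I have in mind, here is a hypothetical before-and-after in Python. Both functions and the sample data are invented for this post; the “after” version is simply one plausible refactor, not the only correct one.

```python
def active_emails_before(users):
    # Index-based loop and explicit comparison to True: works, but dated.
    result = []
    for i in range(len(users)):
        if users[i]["active"] == True:
            result.append(users[i]["email"].lower())
    return result


def active_emails_after(users: list[dict]) -> list[str]:
    # List comprehension with built-in generic type hints (Python 3.9+).
    # Relies on truthiness, which matches the version above as long as
    # "active" is a real boolean.
    return [user["email"].lower() for user in users if user["active"]]


users = [
    {"email": "Ada@Example.com", "active": True},
    {"email": "bob@example.com", "active": False},
]

# Both versions should agree on the same input.
assert active_emails_before(users) == active_emails_after(users) == ["ada@example.com"]
```

The comment about truthiness is exactly the sort of subtle behavioural difference a human reviewer should check before accepting a suggested refactor.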

Developer Experience

An improved developer experience is one of the big gains we are seeing from using these tools in our day-to-day work. Life as an agency software developer can be incredibly exciting, working with cutting-edge tech and delivering cool projects you’re genuinely invested in. The bit people talk about less is how much of the job is still spent on relatively mundane tasks. Writing short, simple tests. Adding documentation to existing code. Upgrading dependency versions. Many of the tools described above help to reduce the time and workload associated with these tasks. In reality, what we are talking about is reducing the inevitable grunt work that is inextricable from the job we love.

Another outcome of the AI seat is that we gain a better understanding of which tools are already being used in the wider industry, and the impact this is having. We recently shared a GitHub report internally, in which they surveyed 500 enterprise developers on how frequently AI tooling is being used to support their coding and how that is affecting the developer experience. Although I’d definitely suggest giving the report a look, the TL;DR (too long; didn’t read) is that almost all the developers surveyed (92%) are regularly using AI tooling, and 87% said that tools such as Copilot helped them preserve mental effort while completing more repetitive tasks.

Not only is this great news for us as developers, but it also has a huge knock-on effect for our clients. Obviously it reduces time to delivery (or frees up more budget for the feature work product owners want to see), but it also means that the developers on your project will be more engaged and motivated, and will find it easier to interact with the codebase when they roll on or off.

Our Current Approach

Within the Rocketmakers developer team, the main feeling is that, unlike many overly hyped or zeitgeist-y technologies, AI is not going anywhere. Although it is not going to replace our jobs entirely (anytime soon at least), it may well reshape what they look like and where we focus our time. This means that if we don’t begin testing and adopting some of these tools, then not only will we fail to get ahead of the curve, but we actually risk falling behind.

Given that this is fairly new territory, we are taking a considered and, where we can, measurable approach to including AI tooling in our project delivery. For example, we are currently running a trial of Copilot with a small number of developers so we can gather both objective and subjective data before potentially rolling it out to the entire developer team.

One area we are putting particular focus on is code quality. Our CI/CD pipelines run code quality checks using CodeClimate so that if we ever push anything that reduces the overall quality of our codebase then we can immediately see and address this. Many of our developers are already using ChatGPT to add basic documentation, create simple unit tests or suggest edge cases when we are building out test suites for functions or services.

As with all areas of the business, we are striving to upskill those who are interested and passionate. In the outside world, free resources are released daily. Google recently added a cloudskill course for those looking to understand the higher level concepts underpinning many of these tools.

As with any new technology, there are also potential risks and pitfalls, several of which have been raised in the AI seat discussions.

Reservations and Caveats

One concern we have is around the privacy and security of the code we write for our clients. Assistive tools such as Copilot work by learning from codebases they have previously encountered and, critically, from the style and implementations within yours. This raises the question: what happens when we allow our own or our clients’ codebases to become part of the tool’s source data? Should we allow this? What if our codebase includes secret values such as API keys?

Thankfully, both Tabnine and Copilot have paid business tiers that allow us to keep the data within the Rocketmakers organisation. We are currently running short trials to decide which pair programming tool best fits our needs and our clients’ needs, and conforms to our privacy requirements.
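Whichever tier is used, the API key question is worth a cheap extra precaution when pasting snippets into any external tool. The following is a minimal sketch in Python, assuming secrets appear as quoted string literals assigned to names containing words like “key”, “secret”, “token” or “password”. The pattern and the `redact_secrets` helper are hypothetical illustrations, and a regex like this is no substitute for a dedicated secret scanner or for keeping secrets out of source code in the first place.

```python
import re

# Hypothetical pattern: a name containing api key/secret/token/password,
# followed by "=" or ":", followed by a quoted value of 8+ characters.
SECRET_ASSIGNMENT = re.compile(
    r"""\b(\w*(?:api_?key|secret|token|password)\w*)(\s*[:=]\s*)(["'])([^"']{8,})\3""",
    re.IGNORECASE,
)


def redact_secrets(source: str) -> str:
    """Replace likely hard-coded secret values with a placeholder."""

    def _mask(match: re.Match) -> str:
        name, separator, quote = match.group(1), match.group(2), match.group(3)
        return f"{name}{separator}{quote}<REDACTED>{quote}"

    return SECRET_ASSIGNMENT.sub(_mask, source)


snippet = 'API_KEY = "sk_live_1234567890"\ntimeout = 30'
print(redact_secrets(snippet))
```

Run against the two-line snippet above, this masks the value assigned to `API_KEY` and leaves the `timeout` line untouched.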

Another concern that many people have is that generative code tools may lead to a drop in the quality of output. The thought runs as follows: a developer asks tool X to produce function Y, copies and pastes the output into the codebase and, seeing that it works, moves on with their life. This could potentially introduce poor-quality or even malicious code into our codebase.

As our reliance on this type of tool increases, this concern carries more weight.

However, this is no different from using code found online, which we do daily when troubleshooting problems. Whether we are using code from ChatGPT or Stack Overflow (think wikiHow for devs), we should follow the same steps:

- Do not use any code you don’t understand!

- Review and update the code so it fits with your/our coding style and standards

- Run all tests and code quality checks after adding the code

- Ask another developer for a review before deploying

The take-home message here is that if we cut corners, mistakes will happen. The more rigorous our QA process is, the better and safer our output will be. Introducing assistive AI tools does not change this.

In summary…

Utilising AI tools to assist software developers is already an established paradigm, whether to generate code, check its quality or free up time for more creative problem solving and higher-level conceptual work. Although it needs to be paired with an informed and considered approach, this is only going to become more commonplace as these tools mature and become more feature-rich. For clients of a software agency implementing these technologies and practices, this promises to improve project efficiency, overall code quality and the engagement and motivation of your developer team.